B - Standard Unreal Engine Post Processing Pipeline

Unreal Engine has a sophisticated post-processing pipeline. Because this pipeline is very complex, it is driven by its own node-based processing system. The node graph is regenerated every frame according to the current rendering parameters. For example, bloom might be turned off in one frame (Bloom Intensity set to 0) and turned on in the next. Unreal Engine rebuilds its node graph every frame so that only the passes that are actually needed are processed, which optimizes processing speed.
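The per-frame rebuild can be illustrated with a minimal Python sketch. It is not Unreal Engine code. The `PostProcessSettings` fields and the `build_pass_list` function are hypothetical, and the pass order is simplified to a few of the passes discussed in this appendix. The sketch only shows that a pass whose setting is disabled (here, a Bloom Intensity of 0) never enters that frame's graph.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PostProcessSettings:
    # Hypothetical, simplified settings; field names are illustrative, not Unreal's API.
    depth_of_field: bool = True
    bloom_intensity: float = 1.0
    lens_flare_intensity: float = 0.0


def build_pass_list(settings: PostProcessSettings) -> List[str]:
    """Rebuild the ordered list of post-process passes for one frame."""
    passes: List[str] = []
    if settings.depth_of_field:
        passes.append("DepthOfField")
    passes.append("TemporalAA")
    # Bloom and lens flare are only added when they have a visible effect.
    if settings.bloom_intensity > 0.0:
        passes.append("Bloom")
    if settings.lens_flare_intensity > 0.0:
        passes.append("LensFlare")
    passes.append("ToneMapper")
    return passes


# Two consecutive frames with different settings produce different graphs.
frame_a = build_pass_list(PostProcessSettings(bloom_intensity=0.0))
frame_b = build_pass_list(PostProcessSettings(bloom_intensity=0.8))
```

In `frame_a` the bloom pass is simply absent, so no GPU time is spent on it.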

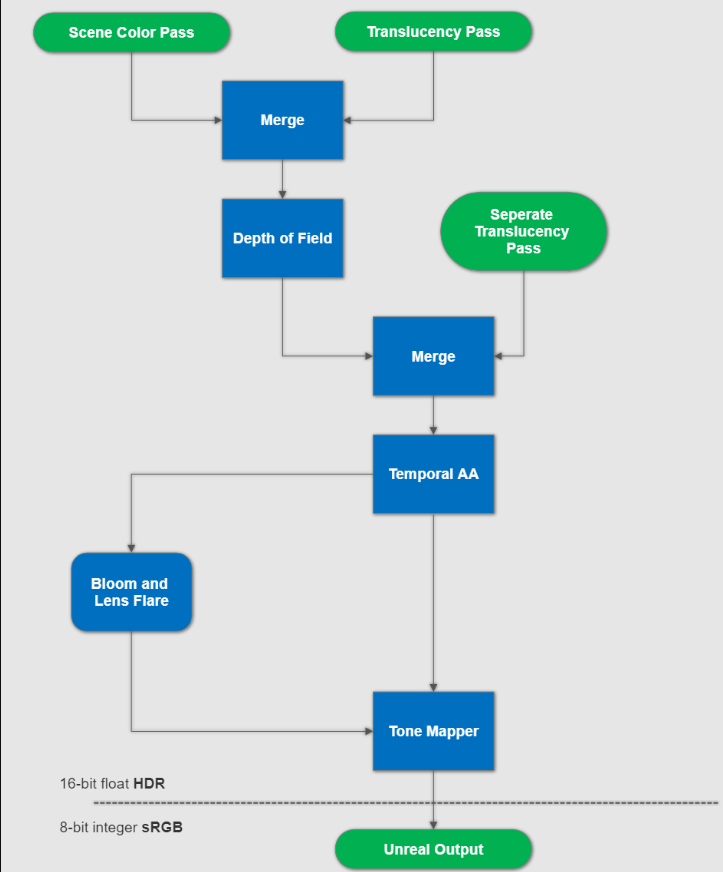

The “Translucency pass” is composited on top of the “Scene Color pass” at a very early stage. Depth of field is then processed, and only afterwards is “Separate translucency” merged on top of the resulting image. This is why materials that use “Separate translucency” are not affected by the depth of field effect, while standard translucent materials are. As a result, separate translucent materials do not look photorealistic, because they do not match the focus of the physical camera.

Separate translucency is sometimes also called “transparency after DOF”. In a virtual studio environment, translucent materials of this type will look fake whenever Depth of Field is used.
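The ordering effect can be reproduced on a single scanline. In this plain Python sketch (not Unreal code), a box blur stands in for depth of field and an `over` blend stands in for translucency compositing; all function names are hypothetical. The standard translucent object is composited before the blur and gets smeared, while the separate translucent object is composited after it and keeps a hard edge.

```python
from typing import List


def over(dst: List[float], src: List[float], alpha: List[float]) -> List[float]:
    """Per-pixel 'over' blend of src onto dst using src's alpha."""
    return [s * a + d * (1.0 - a) for d, s, a in zip(dst, src, alpha)]


def box_blur(img: List[float], radius: int) -> List[float]:
    """1D box blur, a crude stand-in for depth-of-field defocus."""
    n = len(img)
    out = []
    for i in range(n):
        lo, hi = max(0, i - radius), min(n, i + radius + 1)
        out.append(sum(img[lo:hi]) / (hi - lo))
    return out


def composite_frame(scene, translucent, translucent_alpha,
                    separate, separate_alpha, blur_radius=2):
    # Standard translucency is composited onto scene color first...
    color = over(scene, translucent, translucent_alpha)
    # ...so the depth-of-field blur affects it together with the opaque scene.
    color = box_blur(color, blur_radius)
    # Separate translucency is merged after depth of field and stays sharp.
    return over(color, separate, separate_alpha)


# 20-pixel scanline: black background, one thin standard translucent object at
# pixel 5 and one thin separate translucent object at pixel 15.
n = 20
scene = [0.0] * n
translucent = [1.0 if i == 5 else 0.0 for i in range(n)]
separate = [1.0 if i == 15 else 0.0 for i in range(n)]
result = composite_frame(scene, translucent, translucent, separate, separate)
```

The standard translucent object ends up spread over five pixels at 20% intensity, while the separate translucent object remains a single full-intensity pixel, regardless of where the camera is focused.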

Temporal anti-aliasing is applied after depth of field. “Temporal AA” is a special anti-aliasing technique that uses GPU hardware very efficiently, because it accumulates samples over successive frames instead of rendering many samples per pixel in a single frame. We strongly recommend using this anti-aliasing method for the best-quality results.
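The core idea of temporal accumulation can be sketched as an exponential moving average. Each frame contributes one slightly jittered sample per pixel, and that sample is blended into a history buffer. This is a generic illustration, not Unreal's implementation: a production TAA also reprojects the history with motion vectors and clamps it to reduce ghosting, and both steps are omitted here. The `alpha` value of 0.1 and the function names are illustrative assumptions.

```python
from typing import List


def temporal_accumulate(history: List[float], current: List[float],
                        alpha: float = 0.1) -> List[float]:
    """Blend this frame's samples into the history buffer (exponential moving average)."""
    return [h + alpha * (c - h) for h, c in zip(history, current)]


def jittered_edge_sample(frame_index: int) -> float:
    # Hypothetical pixel half covered by an edge: the sub-pixel jitter alternates
    # between a sample position inside (1.0) and outside (0.0) the edge.
    return 1.0 if frame_index % 2 == 0 else 0.0


history = [jittered_edge_sample(0)]
for frame in range(1, 200):
    history = temporal_accumulate(history, [jittered_edge_sample(frame)])
```

No single frame ever sees a coverage value other than 0 or 1, yet the accumulated pixel settles close to the true 50% coverage (oscillating roughly between 0.47 and 0.53 with `alpha = 0.1`).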

If lens flares and/or bloom are enabled in your post process settings, the bloom and lens flare passes are calculated from the anti-aliased image buffer. The bloom and lens flare buffers are then merged and passed to the “Tone Mapper” pass. This pass first adds the combined bloom/lens flare buffer on top of the anti-aliased image, then tone maps the result from “linear gamma” space to the “sRGB” color space.
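The order of operations in the “Tone Mapper” pass can be sketched per pixel value: add the bloom/lens flare contribution, compress the linear HDR value with a tone curve, then apply the sRGB transfer function. The sRGB encoding below is the standard IEC 61966-2-1 curve. The Reinhard curve `x / (1 + x)` is a deliberately simple stand-in for Unreal's own filmic tone curve, which differs from it. All function names are hypothetical.

```python
from typing import List


def srgb_encode(linear: float) -> float:
    """sRGB transfer function (IEC 61966-2-1) for a linear value in [0, 1]."""
    if linear <= 0.0031308:
        return 12.92 * linear
    return 1.055 * linear ** (1.0 / 2.4) - 0.055


def reinhard(x: float) -> float:
    """Simple Reinhard tone curve, a stand-in for Unreal's filmic curve."""
    return x / (1.0 + x)


def tone_mapper_pass(scene_linear: List[float], bloom_linear: List[float]) -> List[float]:
    out = []
    for s, b in zip(scene_linear, bloom_linear):
        hdr = s + b                               # 1. add bloom/lens flare on top of the AA image
        out.append(srgb_encode(reinhard(hdr)))    # 2. tone map, 3. encode to sRGB
    return out
```

Because bloom is added while the values are still linear, a bright highlight plus its glow is compressed by the tone curve as one value instead of being clipped twice.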

Reality gives the option to bypass Unreal Engine’s “Tone Mapper” and output 16-bit HDR images instead. This provides linear gamma output from Unreal Engine and a higher bit depth for video output.
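The benefit of bypassing the tone mapper can be shown by comparing a bright linear value stored as a 16-bit half float with the same value clipped and quantized to 8 bits. This sketch assumes the 16-bit HDR output is IEEE 754 half precision (Python's `struct` format `"e"`). The 8-bit branch is a hypothetical, simplified display path with the tone curve omitted, not Reality's actual output code.

```python
import struct


def to_half(x: float) -> float:
    """Round-trip a value through IEEE 754 binary16 (16-bit half float)."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_8bit(x: float) -> int:
    """Clip to [0, 1] and quantize to an 8-bit code value (0-255)."""
    return round(min(max(x, 0.0), 1.0) * 255)


def encode_output(linear: float, bypass_tone_mapper: bool) -> float:
    if bypass_tone_mapper:
        # Linear-gamma HDR: values above 1.0 (e.g. highlights) survive.
        return to_half(linear)
    # Simplified display path: highlights above 1.0 are clipped.
    return to_8bit(linear) / 255.0


highlight = 4.0  # a highlight two stops above a diffuse white of 1.0
print(encode_output(highlight, bypass_tone_mapper=True))   # 4.0
print(encode_output(highlight, bypass_tone_mapper=False))  # 1.0
```

The half-float path keeps the highlight's real intensity for downstream grading or compositing, at the cost of a larger output format.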

Reality can take “logarithmic encoded HDR” SDI video as input, convert it to “linear gamma” floating-point HDR, do all of the processing at floating-point precision, and output the result back to SDI using logarithmic encoding. One example is the “LogC” encoding of “Arri Alexa/Amira” cameras.
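The log-to-linear round trip can be sketched with the ARRI LogC (v3) curve. The constants below are ARRI's published LogC v3 parameters for EI 800; other EI settings use different values. Signal values are assumed to be already normalized to 0-1, and SDI legal-range scaling is ignored. The function names and the exposure gain standing in for “all the processing” are hypothetical; this is not Reality's implementation.

```python
import math
from typing import List

# ARRI LogC v3 parameters for EI 800 (assumed; values change with EI).
CUT, A, B, C, D, E, F = 0.010591, 5.555556, 0.052272, 0.247190, 0.385537, 5.367655, 0.092809


def logc_to_linear(t: float) -> float:
    """Decode a normalized LogC signal value to scene-linear."""
    if t > E * CUT + F:
        return (10.0 ** ((t - D) / C) - B) / A
    return (t - F) / E


def linear_to_logc(x: float) -> float:
    """Encode a scene-linear value back to a normalized LogC signal value."""
    if x > CUT:
        return C * math.log10(A * x + B) + D
    return E * x + F


def process_log_frame(log_pixels: List[float], exposure_gain: float) -> List[float]:
    """Decode to linear float, process in linear (here a simple gain), re-encode to LogC."""
    return [linear_to_logc(logc_to_linear(t) * exposure_gain) for t in log_pixels]
```

With these constants, 18% gray (`0.18` linear) encodes to a LogC value of about 0.391. Doing the gain in linear floating point rather than on the log signal keeps the operation physically meaningful and avoids banding from repeated integer quantization.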